With the Functor-Applicative-Monad proposal just around the corner, I’ve been wondering what exactly the relation between Applicative and Monad is. Every Monad can be made a Functor with fmap = liftM where

    liftM :: Monad m => (a -> b) -> m a -> m b
    liftM f ma = do a <- ma
                    return (f a)

In fact, parametricity guarantees that fmap = liftM; it’s a free theorem. There is another law relating Functor and Monad, ma >>= f = join (fmap f ma). This law is particularly interesting because if you define a Monad the way mathematicians do using join instead of (>>=) then you must have Functor as a superclass in order to define (>>=). So it seems very natural for Functor to be a superclass of Monad.
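To make the law ma >>= f = join (fmap f ma) concrete, here is a minimal sketch that defines bind from join and fmap and compares it with the built-in (>>=) on lists and Maybe. The name bindViaJoin is hypothetical and chosen only for this illustration; it is not a library function.

```haskell
import Control.Monad (join)

-- Hypothetical helper: (>>=) recovered from join and fmap.
bindViaJoin :: Monad m => m a -> (a -> m b) -> m b
bindViaJoin ma f = join (fmap f ma)

main :: IO ()
main = do
  let f x = [x, x * 10] :: [Int]
  print (bindViaJoin [1, 2, 3] f)              -- [1,10,2,20,3,30]
  print ([1, 2, 3] >>= f)                      -- [1,10,2,20,3,30]
  print (bindViaJoin (Just 4) (Just . (+ 1)))  -- Just 5
```

The first two lines print the same list, which is the law specialised to the list monad.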

However, it’s not so obvious to me why Applicative should be a superclass of Monad. It’s true that every Monad can be made an Applicative with (<*>) = ap where

    ap :: Monad m => m (a -> b) -> m a -> m b
    ap mf ma = do f <- mf
                  a <- ma
                  return (f a)

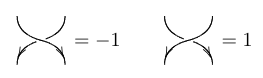

One should naturally expect that (<*>) = ap is a law when Applicative is a superclass of Monad. But (<*>), unlike fmap, is not uniquely determined: a type constructor may have more than one lawful Applicative instance. For example, lists have two useful instances.

    ap :: [a -> b] -> [a] -> [b]
    ap fs as = [f a | f <- fs, a <- as]

    zap :: [a -> b] -> [a] -> [b]
    zap [] _ = []
    zap _ [] = []
    zap (f:fs) (a:as) = f a : zap fs as

ap delivers a list of all functions in the first list applied to all values in the second list while zap goes down the lists applying functions to values at the same index. So which do you choose? Well, ap is the one which is compatible with the Monad instance on lists, so it wins. zap is used as the Applicative instance for a newtype on lists, the ZipList. Interestingly, there is no compatible Monad instance on ZipLists.
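As a quick check of the two behaviours, the following sketch runs the list instance from base next to ZipList from Control.Applicative on the same inputs. The input lists are made up for illustration.

```haskell
import Control.Applicative (ZipList (..))
import Control.Monad (ap)

main :: IO ()
main = do
  let fs = [(+ 1), (* 2)] :: [Int -> Int]
      as = [10, 20] :: [Int]
  -- The list Applicative applies every function to every value.
  print (fs <*> as)                              -- [11,21,20,40]
  -- It agrees with ap from the list Monad.
  print (fs `ap` as)                             -- [11,21,20,40]
  -- ZipList pairs functions and values by position.
  print (getZipList (ZipList fs <*> ZipList as)) -- [11,40]
```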

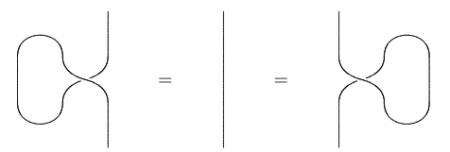

The ambiguity for lists resolves nicely enough. But in creating data types for concurrency, one runs into cases where you might want Applicative and Monad instances where (<*>) /= ap. A really good example can be found in the Haxl paper. Haxl is a library developed by Facebook to manage data requests in a way that maximizes concurrency for the sake of efficiency. In section 4 of the paper, we find out that for the type constructor Fetch,

    Blocked (Done (+1)) <*> Blocked (Done 1) = Blocked (Done 2)
    Blocked (Done (+1)) `ap` Blocked (Done 1) = Blocked (Blocked (Done 2))

I won’t go over the details here, but the takeaway is that the Applicative can take advantage of looking at both arguments of (<*>), which are both in Fetch, while the Monad cannot because it must use (>>=), whose second argument is a function with a pure input. So here we have an example of a law-breaking Applicative/Monad pair. As with ZipList, there is no Monad instance which is compatible with the Applicative instance. So why not newtype? The motivation is that we want to be able to use concurrency implicitly without the overhead of having to wrap and unwrap a newtype. Whether that justifies breaking the law is a judgment call. Notice however that the law breaks in a well-defined way: (<*>) is equal to ap up to idempotency of Blocked, the relation that Blocked . Blocked = Blocked.
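The two equations can be reproduced with a toy model. The sketch below assumes a stripped-down Fetch with only Done and Blocked constructors and no actual data fetching; the instances are my reconstruction for illustration, not Haxl's real implementation, and collapse is a hypothetical helper that merges nested Blocked layers.

```haskell
import Control.Monad (ap)

-- Toy model (assumption): a computation is finished or blocked one more round.
data Fetch a = Done a | Blocked (Fetch a)
  deriving (Show, Eq)

instance Functor Fetch where
  fmap f (Done a)    = Done (f a)
  fmap f (Blocked c) = Blocked (fmap f c)

-- The Applicative sees both arguments, so two blocked sides share one round.
instance Applicative Fetch where
  pure = Done
  Done f    <*> Done a    = Done (f a)
  Done f    <*> Blocked c = Blocked (f <$> c)
  Blocked c <*> Done a    = Blocked (c <*> Done a)
  Blocked c <*> Blocked d = Blocked (c <*> d)

-- The Monad must finish the left side before it can even see the right side.
instance Monad Fetch where
  Done a    >>= k = k a
  Blocked c >>= k = Blocked (c >>= k)

-- Hypothetical helper: quotient by Blocked . Blocked = Blocked.
collapse :: Fetch a -> Fetch a
collapse (Blocked c) = case collapse c of
  Blocked d -> Blocked d
  done      -> Blocked done
collapse done = done

main :: IO ()
main = do
  let mf = Blocked (Done (+ 1)) :: Fetch (Int -> Int)
      ma = Blocked (Done 1) :: Fetch Int
  print (mf <*> ma)                                   -- Blocked (Done 2)
  print (mf `ap` ma)                                  -- Blocked (Blocked (Done 2))
  print (collapse (mf <*> ma) == collapse (mf `ap` ma)) -- True
```

The last line is the "up to idempotency" claim: once nested Blocked layers are merged, the two results coincide.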

I want to work through another example that was inspired by concurrency in Purescript.

First let’s take care of the imports.

    import Control.Applicative
    import Control.Concurrent
    import Control.Concurrent.Async
    import Control.Concurrent.MVar
    import Control.Monad.IO.Class
    import Data.Traversable
    import Prelude hiding (mapM)
    import System.Random

Annoyingly we have to hide either the mapM from Prelude or Data.Traversable but this should be fixed by the Foldable/Traversable in Prelude proposal. The type constructor we’ll work with is for callbacks.

newtype Callback a = Callback {runCallback :: (a -> IO ()) -> IO ()}

You should recognize that Callback is isomorphic to ContT () IO, so we can just crib the definitions of Functor and Monad instances from your favorite source. We’ll also give a MonadIO instance.
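The isomorphism can be spelled out by wrapping and unwrapping the two newtypes. This is a small sketch assuming ContT from the transformers package (Control.Monad.Trans.Cont); the names toContT and fromContT are hypothetical and introduced only here.

```haskell
import Control.Monad.Trans.Cont (ContT (..))

newtype Callback a = Callback {runCallback :: (a -> IO ()) -> IO ()}

-- Hypothetical names: both directions just swap one constructor for the other.
toContT :: Callback a -> ContT () IO a
toContT (Callback g) = ContT g

fromContT :: ContT () IO a -> Callback a
fromContT (ContT g) = Callback g

main :: IO ()
main = do
  let c = Callback (\k -> k (42 :: Int))
  -- A round trip leaves the callback's behaviour unchanged.
  runCallback (fromContT (toContT c)) print   -- prints 42
  -- The ContT view can be run directly with runContT.
  runContT (toContT c) print                  -- prints 42
```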

    instance Functor Callback where
      fmap f c = Callback (\k -> runCallback c (k . f))

    instance Monad Callback where
      return a = Callback (\k -> k a)
      c >>= f = Callback (\k -> runCallback c (\a -> runCallback (f a) k))

    instance MonadIO Callback where
      liftIO io = Callback (\k -> io >>= k)

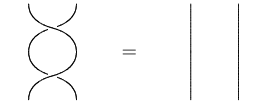

For the Applicative instance we want to take advantage of the ability to do our IO side effects concurrently. Let’s see how this works.

    instance Applicative Callback where
      pure a = Callback (\k -> k a)
      cf <*> ca = Callback $ \k -> do
        vf <- newEmptyMVar
        va <- newEmptyMVar
        let finish = do f <- takeMVar vf
                        a <- takeMVar va
                        k (f a)
        race_ (runCallback cf (putMVar vf) >> finish)
              (runCallback ca (putMVar va) >> finish)

Ok, so what’s going on in the definition of (<*>)? Well, first we create new empty mutable variables in which we will store a function f and a value a to apply it to, but which we must perform some IO effects to get. finish is where we perform the callback on the result. finish looks a whole lot like ap. The magic happens in the function race_, which is from Simon Marlow’s delightful async package. It runs two IO actions concurrently, canceling the loser. Because finish blocks when either vf or va is empty, both callbacks are run concurrently with a putMVar as the callback, filling up the variables. Then finish will unblock, and the first branch to complete it wins the race.
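To see race_ and MVar in isolation, here is a minimal sketch, assuming the async package is installed. Two actions sleep for different, arbitrarily chosen durations and then try to fill the same MVar; race_ returns as soon as the faster one finishes and cancels the other.

```haskell
import Control.Concurrent (threadDelay)
import Control.Concurrent.Async (race_)
import Control.Concurrent.MVar (newEmptyMVar, putMVar, takeMVar)

main :: IO ()
main = do
  winner <- newEmptyMVar
  -- Delays are in microseconds: 0.1 seconds against 2 seconds.
  race_ (threadDelay 100000  >> putMVar winner "fast")
        (threadDelay 2000000 >> putMVar winner "slow")
  -- Only the faster branch got to write before the slower one was cancelled.
  takeMVar winner >>= putStrLn   -- prints fast
```

The program finishes after roughly 0.1 seconds rather than 2, because the slower branch is cancelled while it is still sleeping.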

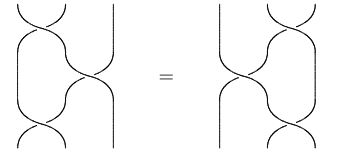

Let’s see the difference in performance between the Applicative and Monad instances on an example.

    square :: Int -> Callback Int
    square n = do
      liftIO $ do
        let second = 10^6
        random <- randomRIO (0, second)
        threadDelay random
        let time = fromIntegral random / fromIntegral second
        putStrLn $ "Squaring " ++ show n ++ " after " ++ show time ++ " seconds."
      return (n^2)

    main = do
      putStrLn "Traversing [1..10] using Monad"
      runCallback (mapM square [1..10]) print
      putStrLn "Traversing [1..10] using Applicative"
      runCallback (traverse square [1..10]) print

And here’s an example of what the output looks like.

    Traversing [1..10] using Monad
    Squaring 1 after 0.723773 seconds.
    Squaring 2 after 0.603614 seconds.
    Squaring 3 after 0.203097 seconds.
    Squaring 4 after 0.535666 seconds.
    Squaring 5 after 0.414605 seconds.
    Squaring 6 after 0.807218 seconds.
    Squaring 7 after 0.580662 seconds.
    Squaring 8 after 0.715894 seconds.
    Squaring 9 after 0.641902 seconds.
    Squaring 10 after 0.180422 seconds.
    [1,4,9,16,25,36,49,64,81,100]

    Traversing [1..10] using Applicative
    Squaring 4 after 4.8804e-2 seconds.
    Squaring 5 after 0.140811 seconds.
    Squaring 3 after 0.142185 seconds.
    Squaring 7 after 0.373733 seconds.
    Squaring 9 after 0.386626 seconds.
    Squaring 8 after 0.393645 seconds.
    Squaring 1 after 0.417399 seconds.
    Squaring 6 after 0.780407 seconds.
    Squaring 2 after 0.803894 seconds.
    Squaring 10 after 0.96637 seconds.
    [1,4,9,16,25,36,49,64,81,100]

The Monad is forced to perform the effects consecutively, taking up to 10 seconds (ignoring the time it takes to get a random number and to print). The Applicative performs the effects concurrently, taking only up to 1 second. So here’s another example where (<*>) /= ap and we may be somewhat justified by the desire for implicit concurrency. Notice that it’s just the effects that are different, not the result. Said another way, if we bind x <- mf <*> ma and y <- mf `ap` ma, then x = y, so we’re breaking the law, but not flagrantly.